The last article focused on combining organisational data with ChatGPT and Large Language Models, specifically using Microsoft’s 'Azure OpenAI on your data' accelerator, which is designed to simplify this. I’ve been working on the general area of 'AI with your data' (though not that accelerator specifically) with colleagues for a while now, and I don’t think it’s any exaggeration to say that combining generative AI and organisational data will be a big thing for the next few years. The results can be astonishing – we all know what ChatGPT is capable of, but seeing it answer questions and generate content related to an organisation’s clients, products, services, people, and projects rather than its original internet training data immediately shows huge value, providing a “second brain” for employees and supporting many use cases at work. Platform solutions like Microsoft 365 Copilot offer amazing capabilities for core collaboration and productivity, but building your own AI and data solution (often to supplement Copilot) from available building blocks is frequently the way to go for better results with your data.

The overall message from my last article (Integrating your data with ChatGPT - exploring Microsoft's "Azure OpenAI on your data" accelerator) was that the tool is a useful accelerator in some respects, but in reality only gets you so far towards what you probably need. For AI that gives relevant, accurate, and transparent responses to prompts and queries in real-world use cases, implementors need to understand concepts such as retrieval augmented generation (RAG), chunking, vector generation, and more. There are various ways to slice this, but here’s one way of thinking about the top-level considerations: a data platform for RAG, chunking, vector embeddings, AI orchestration, content tuning, and content ingestion/indexing.

All of these concepts are inter-related.

This article tries to help you understand each in more detail, sharing info on our approach and technology selection (for Microsoft-centric solutions) as well as some lessons learnt. I'll finish with some predictions on where the space is going and what I believe will remain important.

Data platform for Retrieval Augmented Generation

Retrieval augmentation has emerged as a key concept for combining generative AI with data, representing arguably the first thing to learn about the space. I summarised it in the “RAG and other concepts” section of the last article, so if the concept is new to you, the three bullet points outlined there may help.

To do RAG, you need a platform for your data, and it may not be the one where the data is currently held. A likely scenario is that the data you wish to integrate with AI is spread across multiple platforms rather than conveniently batched up in one place anyway. At our clients, organisational knowledge is often spread across documents in Microsoft 365 (Teams and SharePoint), various data sources in Azure (e.g. structured data in Azure SQL or Cosmos DB, files in Azure Blob Storage, perhaps some other flavours too), and some single-purpose SaaS applications. While you *may* have some success going directly to a myriad of platforms like this, there are two fundamental reasons why it’s likely to be difficult:

- The data in its native form will not be suited to AI – it will not be chunked or represented as vector embeddings, meaning that poor answers are likely to be returned due to issues with relevance and similarity search (both needed by generative AI)

- Establishing which data source to go to when (across all of the prompts and queries your users might enter) is likely to be difficult, especially when results should be returned in seconds – similarly, responses which combine data from multiple sources will be a challenge if you’re hopping across them

So, what’s often needed is a vector database which also acts as a data aggregation point. This allows you to run one retrieval operation across the right kind of data for AI, where data from your various sources has already been brought together and converted to embeddings. We favour Azure Cognitive Search in our solutions today since it has lots of connectors, a ready-made indexing platform, and support for vector storage, but as discussed last time many vector database options have sprung up in the AI era - from dedicated vector DBs such as Pinecone, Qdrant and Weaviate, to additions to existing technologies like Azure Cosmos DB (MongoDB flavour), Databricks, and Redis. Microsoft promote Azure Cognitive Search for generative AI applications and it does have some fairly unique capabilities, but we regularly review options in this fast-changing space.
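To illustrate the aggregation idea, here is a minimal in-memory sketch: chunks from several hypothetical sources (SharePoint, Azure SQL, a SaaS app) land in one index with their source recorded, so a single similarity query covers all of them. The tiny hand-made vectors stand in for real embeddings, and the `VectorIndex` class is illustrative only; it is not the API of Azure Cognitive Search or any other vector database.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Chunk:
    source: str          # illustrative labels, e.g. "sharepoint", "azure-sql"
    text: str
    vector: list[float]  # embedding produced at ingestion time (hand-made here)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class VectorIndex:
    """Hypothetical aggregation point holding chunks from every source system."""
    chunks: list[Chunk] = field(default_factory=list)

    def add(self, chunk: Chunk) -> None:
        self.chunks.append(chunk)

    def search(self, query_vector: list[float], top_k: int = 3) -> list[Chunk]:
        # One retrieval operation across all sources, ranked by similarity
        ranked = sorted(self.chunks, key=lambda c: cosine(query_vector, c.vector), reverse=True)
        return ranked[:top_k]


index = VectorIndex()
index.add(Chunk("sharepoint", "Project Falcon kick-off notes", [0.9, 0.1, 0.0]))
index.add(Chunk("azure-sql", "Client record: Falcon Ltd, contract value", [0.8, 0.3, 0.1]))
index.add(Chunk("saas-hr", "Holiday policy 2023", [0.0, 0.2, 0.9]))

results = index.search([1.0, 0.2, 0.0], top_k=2)
print([c.source for c in results])  # ['sharepoint', 'azure-sql']
```

The point is less the ranking maths than the shape: the application never decides which source system to query, because everything relevant has already been brought into one place.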

See the “Generating vector embeddings” section for more on what vectors are and why they’re needed in AI solutions.

Expertise in your RAG platform of choice is key – and you may need to bring in support or consultancy if Azure Cognitive Search (or your chosen vector database) isn’t a common skill today.

Chunking

Chunking refers to the practice of splitting long documents that exceed the prompt size limit, e.g. 8,000 or 32,000 tokens for the two GPT-4 variants (a token is around 4 characters of English text). Remember that RAG is all about retrieving some data/information to give to the AI in a big long prompt, but today’s limits mean that a long document will never fit into the prompt in its entirety. What we need is for the most relevant part(s) of the document to be passed to the AI, and that means documents need to be split into chunks in the RAG data platform. Additionally, the models used to generate vectors have similar limits on maximum input, so chunking is needed both for storing your data in the right format and for retrieving it. If you’re using the Azure OpenAI embeddings models for vector generation, the input limit (8,191 tokens for text-embedding-ada-002) is equivalent to around 6,000 words, so chunks need to be smaller than that. You can split your documents into:

- Fixed-size chunks

- Variable chunks

- A hybrid, with some special chunking strategies added (e.g. to deal with specific formats in your documents like tables in PDFs or smart art)
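A minimal sketch of the first two strategies follows, assuming the rough rule of thumb of about 4 characters per token instead of a real tokenizer. `fixed_size_chunks` cuts text into windows of roughly equal token size with a small overlap, so a sentence straddling a boundary appears in both chunks; `paragraph_chunks` is one possible variable strategy that packs whole paragraphs up to a budget. The function names and default sizes are hypothetical choices for illustration, not taken from LangChain or Semantic Kernel.

```python
CHARS_PER_TOKEN = 4  # rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    return max(1, len(text) // CHARS_PER_TOKEN)


def fixed_size_chunks(text: str, max_tokens: int = 512, overlap_tokens: int = 50) -> list[str]:
    """Split text into roughly equal windows that overlap, so context isn't lost at boundaries."""
    size = max_tokens * CHARS_PER_TOKEN
    step = (max_tokens - overlap_tokens) * CHARS_PER_TOKEN
    if step <= 0:
        raise ValueError("overlap_tokens must be smaller than max_tokens")
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def paragraph_chunks(text: str, max_tokens: int = 512) -> list[str]:
    """Variable-size chunks: keep paragraphs whole, packing them until the budget is reached."""
    chunks, current = [], ""
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if current and estimate_tokens(candidate) > max_tokens:
            chunks.append(current)  # budget exceeded: close the current chunk
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


pieces = fixed_size_chunks("A" * 5000, max_tokens=500, overlap_tokens=50)
print([len(p) for p in pieces])  # [2000, 2000, 1400]
```

One caveat of this sketch: a single paragraph longer than the budget still becomes one oversized chunk, so a fuller implementation would fall back to fixed-size splitting for it.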

In our experience, that last point needs some special thought - I expand on it in the later section on “Content tuning”.

Getting chunking right is vital. I speculated in the last article that some of my poor results with the “Azure OpenAI on your data” accelerator were due to inadequate chunking - there is a chunking mechanism in there, but it’s not used under all circumstances and the parameters used in the chunking script may not have suited my data.

In terms of existing tools to help you implement chunking, there are various scripts and options out there. The LangChain splitter is a common one and Semantic Kernel, Microsoft’s AI orchestrator library, also has one. Whatever script or approach you use, in most cases you’ll need to integrate it into your indexing/ingestion pipeline so that as documents and data change and need to be re-indexed, the chunking and other steps happen automatically. More on this in the “Content ingestion/indexing” section.

The following Microsoft documentation is a good read on chunking and related considerations:

Chunk documents in vector search - Azure Cognitive Search | Microsoft Learn

Generating vector embeddings

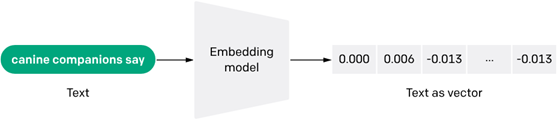

As touched on earlier, in most cases your AI solution will be much more powerful if your data is converted from its original form (e.g. text in a document) to embeddings. This enables similarity search, which is where much of the power of ChatGPT and generative AI comes from, in particular the feeling that the AI understands what you’re asking for regardless of the exact words you used. Most classic search solutions rely on keyword matching – a search for the word “dog” will only return results containing “dog”. However, cats are somewhat related to dogs, and both are related to household pets. When your information is represented as embeddings, these semantic links and relationships can be understood, enabling AI solutions for search, classification, recommendations, data visualisation and more. The approach works not just for text, but for other content types like images, audio, and video: different content types can all be converted to embeddings, enabling interesting scenarios like finding images and video related to concepts discussed in a conversation or document.

Embeddings are created by passing your data (e.g. text inside a document) into an AI model, which returns it as embeddings, i.e. arrays of numbers. The image above, from OpenAI, nicely represents the process.
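The difference between keyword matching and similarity search described above can be shown with toy vectors. The three-dimensional "embeddings" below are invented by hand purely for illustration (real models such as text-embedding-ada-002 return 1,536 dimensions): a keyword search for "dog" finds only the document containing that word, while cosine similarity also ranks the cat document well above the unrelated invoice.

```python
import math

# Hand-made toy vectors; the dimensions loosely mean "animal", "domestic", "finance".
# Real embeddings come from a model and have far more dimensions.
docs = {
    "Our dog needs a walk twice a day": [0.9, 0.8, 0.0],
    "The cat sleeps on the sofa": [0.8, 0.9, 0.1],
    "Invoice 1042 is overdue": [0.0, 0.1, 0.95],
}
query_text = "dog"
query_vector = [0.95, 0.7, 0.0]  # pretend embedding of the query "dog"


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: closer to 1.0 means more similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


# Classic keyword matching: exact word must appear
keyword_hits = [d for d in docs if query_text in d.lower().split()]
# Similarity search: rank every document by closeness to the query vector
semantic_ranking = sorted(docs, key=lambda d: cosine(query_vector, docs[d]), reverse=True)

print("Keyword:", keyword_hits)
print("Semantic:", semantic_ranking)
```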

Most solutions using Azure OpenAI will generate their embeddings using a model behind the Embeddings API, e.g. text-embedding-ada-002. New versions appear as models evolve, and since each model uses different weights internally, their vectors are not interchangeable. Some care is therefore needed to ensure that the embeddings for your stored content and for incoming queries are generated by the same model.
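One simple safeguard, sketched below under the assumption that you control the ingestion code, is to store the embeddings model name alongside every vector and refuse to compare vectors produced by different models. `fake_embed` and `EmbeddingStore` are hypothetical; in a real solution the embed function would call your Azure OpenAI embeddings deployment (for example through the `openai` Python package's `client.embeddings.create(...)`), which is deliberately left out so the sketch runs offline.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class StoredEmbedding:
    model: str                 # embeddings model/version that produced the vector
    vector: tuple[float, ...]


def fake_embed(text: str) -> tuple[float, ...]:
    """Hypothetical stand-in for a call to an embeddings model."""
    return (float(len(text)), float(text.count(" ")))


class EmbeddingStore:
    def __init__(self, model: str, embed: Callable[[str], tuple[float, ...]]):
        self.model = model
        self.embed = embed
        self.items: dict[str, StoredEmbedding] = {}

    def add(self, doc_id: str, text: str) -> None:
        self.items[doc_id] = StoredEmbedding(self.model, self.embed(text))

    def embed_query(self, text: str) -> StoredEmbedding:
        # Queries are tagged with whichever model is currently configured
        return StoredEmbedding(self.model, self.embed(text))

    def check_compatible(self, query: StoredEmbedding) -> None:
        """Raise if any stored vector came from a different model than the query."""
        stale = [d for d, e in self.items.items() if e.model != query.model]
        if stale:
            raise ValueError(f"Re-embed needed: {stale} were created with a different model")


store = EmbeddingStore("text-embedding-ada-002", fake_embed)
store.add("doc-1", "Quarterly sales report")
store.check_compatible(store.embed_query("sales"))  # same model: no error

store.model = "newer-embedding-model"  # hypothetical upgrade applied to queries only
try:
    store.check_compatible(store.embed_query("sales"))
except ValueError as err:
    print(err)  # Re-embed needed: ['doc-1'] were created with a different model
```

In practice the fix after such an error is to re-embed the stored content with the new model, which is one more reason to have an automated ingestion pipeline.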

AI orchestration

When developing AI applications, it quickly becomes apparent that some middleware is needed to do some of the heavy lifting of storing data and calling plugins. LangChain emerged as a popular open source library for this, followed by Semantic Kernel as a Microsoft equivalent. Semantic Kernel provides quite a few valuable functions:

- Connectors to vector databases – including Azure Cognitive Search, but also Azure PostgreSQL, Chroma, Pinecone, Qdrant, Redis, SQLite, Weaviate and a couple of others

- A plugins model – allowing you to call out to other apps and systems from the conversation the user has with the AI. If you’ve heard about ChatGPT plugins (e.g. those for Expedia, Zapier, Slack etc.) then this is the SK equivalent – and since the model provides an abstraction over different plugin architectures, both OpenAI and Azure OpenAI plugins can be used. Importantly, SK also provides some ready-to-go plugins, allowing you to do common operations easily – calling out to an HTTP API, doing file IO, summarising conversations, getting the current time etc., as well as things LLMs aren’t suited to, such as math operations

- Memories – context is crucial in generative AI. The AI needs to understand things previously said in the conversation, so the user can ask contextual questions like “Can you expand on that?” Additionally, SK provides the concept of document memories, enabling the AI to have context of a particular document the user is working with closely. In this case, SK does the work of generating vector embeddings for documents (e.g. those uploaded by the user in a front-end app), thus joining up several of the concepts discussed here
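A minimal sketch of short-term conversation memory follows. `ChatMemory` is a hypothetical class, not one of Semantic Kernel's own types: recent turns are kept, and the oldest ones are dropped once an approximate token budget (again about 4 characters per token) would be exceeded, so a follow-up like “Can you expand on that?” still reaches the model with the preceding context.

```python
from collections import deque


class ChatMemory:
    """Keeps the most recent turns that fit within an approximate token budget."""

    def __init__(self, max_tokens: int = 1000):
        self.max_tokens = max_tokens
        self.turns: deque[tuple[str, str]] = deque()

    @staticmethod
    def _tokens(text: str) -> int:
        return max(1, len(text) // 4)  # rough heuristic, not a real tokenizer

    def _total(self) -> int:
        return sum(self._tokens(content) for _, content in self.turns)

    def add(self, role: str, content: str) -> None:
        self.turns.append((role, content))
        # Evict the oldest turns until the history fits the budget again
        while len(self.turns) > 1 and self._total() > self.max_tokens:
            self.turns.popleft()

    def as_messages(self) -> list[dict[str, str]]:
        # Role/content pairs, the shape chat-style model APIs expect
        return [{"role": r, "content": c} for r, c in self.turns]


memory = ChatMemory(max_tokens=20)
memory.add("user", "Summarise the Falcon project status for me.")
memory.add("assistant", "Falcon is on track; phase two starts in May.")
memory.add("user", "Can you expand on that?")
print(memory.as_messages())  # the first user turn has been evicted to stay within budget
```

Dropping the oldest turns is the simplest policy; summarising older turns instead (one of the ready-made operations mentioned above) keeps more context for the same budget.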

The real power of orchestration comes with chaining plugins and functions together in both predetermined and non-predetermined ways. The latter case, where we allow the LLM to decide how best to use a set of additional capabilities to meet a certain goal (i.e. a request made by a user in a prompt), is extremely interesting. For this to be effective, functions need to be described well so that the AI can decide whether they will be useful. The concept of giving the AI agency to decide which tools from an extended toolkit may be useful for a given task (i.e. beyond what it was initially trained on) has huge potential for organisational use. Consider an insurance company offering home/car/pet insurance policies to a large client base – with the right set of plugins, it would be possible to make complex requests in prompts such as:

“Find all clients with a total annual contract value in the bottom 50%, and for each generate a personalised e-mail recommending policy extras not currently taken. Upload the draft e-mails to SharePoint and post a summary of client numbers and key themes to the ‘Client Retention’ team in Microsoft Teams to allow review”.

Such a request could simplify a complex data analysis, content generation, and approval exercise massively, not only reducing effort and cost but potentially bringing in new revenue through the campaign results. The capability is ground-breaking because we are able to approximate human work – taking a fairly open-ended input and establishing the process and tools to get to an outcome, perhaps via certain milestones. This is generative AI supporting automation within the workplace, leveraging GPT’s ability to process data, identify anomalies, establish trends, generate content, and take action via plugins.

Semantic Kernel is particularly strong in this, with several planner types offered to suit different “thinking approaches”. Simple cases will use the ActionPlanner, with more complex multi-step processes using one of the others.

See the planner capability in the SK docs for more info.
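To make the idea of well-described functions and planning more concrete, here is a deliberately simplified sketch. It is not Semantic Kernel's API: the `describe` decorator, the registry and `naive_plan` are all hypothetical, and the keyword-overlap "planner" merely stands in for the LLM, which in a real orchestrator reads the function descriptions and proposes the steps. Once a plan exists, executing it is a chain in which each function's output feeds the next.

```python
import re
from typing import Callable

REGISTRY: dict[str, tuple[str, Callable[[str], str]]] = {}


def describe(description: str):
    """Register a function with the natural-language description a planner would read."""
    def wrap(fn: Callable[[str], str]) -> Callable[[str], str]:
        REGISTRY[fn.__name__] = (description, fn)
        return fn
    return wrap


@describe("find clients with low annual contract value")
def find_low_value_clients(_: str) -> str:
    return "Acme Ltd; Birch plc"  # hypothetical data


@describe("draft a personalised email for each client")
def draft_emails(clients: str) -> str:
    return "\n".join(f"Dear {c.strip()}, ..." for c in clients.split(";"))


@describe("post a summary message to a team channel")
def post_summary(drafts: str) -> str:
    return f"Posted summary of {len(drafts.splitlines())} drafts"


def _words(text: str) -> set[str]:
    # Lower-cased words longer than three letters: a crude way to skip "a", "to", "and"
    return {w for w in re.findall(r"[a-z]+", text.lower()) if len(w) > 3}


def naive_plan(goal: str) -> list[str]:
    """Stand-in for an LLM planner: pick functions whose description overlaps the goal."""
    goal_words = _words(goal)
    return [name for name, (desc, _) in REGISTRY.items() if goal_words & _words(desc)]


def run_plan(plan: list[str], initial_input: str = "") -> str:
    result = initial_input
    for name in plan:
        result = REGISTRY[name][1](result)  # output of one step becomes input to the next
    return result


goal = "Find low value clients, draft an email to each and post a summary"
plan = naive_plan(goal)
outcome = run_plan(plan)
print(plan)
print(outcome)  # Posted summary of 2 drafts
```

Note that this toy planner simply keeps registration order; a real LLM-based planner also works out the order of steps and what to pass between them, which is where good descriptions really pay off.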

Content tuning

In the Chunking section earlier I touched on some of the complexities of chunking specific content, such as tables in PDF files. Attention needs to be paid to what’s IN the files you are working with – not all content is created equal, and text in paragraphs is more easily understood by AI than tables, graphs, and other visualisations. Specific examples we’ve run into where the AI did not initially give great answers include:

- Tables (in both PDF and Office docs), especially:

  - Long tables spanning multiple pages

  - Tables where some rows are effectively a “sub-header row”

- Scanned/OCR’d documents where the content is effectively an image

- HTML content

- Images

- Smart art

- Document structure elements (headings, subheadings etc.) which convey semantics

We needed to take specific steps to deal with such content, and as mentioned in the last article I think it’s where accelerators like Azure OpenAI on your data can run out of steam. For a production-grade AI platform, you’ll need to establish what you need to solve for in this area and prioritise accordingly - there’s almost no upper limit to how much tuning and content optimisation you could implement. Note also that while I label this “content tuning”, the tuning actually takes place in your platform mechanisms – most specifically your content ingestion pipeline and chunking script/code. You’re not changing content to suit the AI, because the business will create content as the business needs to. That said, one tactic for special content may be to index a modified version of a file rather than the original – so long as you have a mechanism for ongoing ingestion of content created by the business.

So what are the specific steps you might need to take? A possible toolbox here includes:

- Modifying chunking to recognise long tables and adopt tactics such as:

  - Create a larger chunk than normal so the entire table fits into one

  - Ensure the table header is repeated every time the table is split

- Implementing ‘document cracking’ (aka document understanding) using something like:

  - Microsoft Syntex – perhaps to leverage its extraction capabilities with important values inside documents (e.g. contract value, start date, end date, special clauses etc.); this can ensure vital details are indexed properly

  - Azure AI Document Intelligence – similar to Syntex, using the Layout model allows you to crack PDFs or images to text, even if it’s a scanned document where the content is actually an image

Both of those document cracking approaches (Syntex and Azure Document Intelligence) allow tables to be processed since they understand headers, rows and columns.
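The long-table tactics above can be sketched as follows, assuming the table has already been cracked into rows (for instance by one of the document understanding services just mentioned). Each chunk repeats the header row. As a hypothetical heuristic for the sub-header rows mentioned earlier, a row whose only non-empty cell is the first one is treated as a sub-header and carried into the next chunk, so data rows keep their context. Rendering as pipe tables is a common but not mandatory choice for feeding tables to an LLM.

```python
def is_subheader(row: list[str]) -> bool:
    """Heuristic (assumption): only the first cell has content."""
    return bool(row[0].strip()) and not any(cell.strip() for cell in row[1:])


def render(header: list[str], body: list[list[str]]) -> str:
    lines = [header, ["---"] * len(header), *body]
    return "\n".join("| " + " | ".join(r) + " |" for r in lines)


def chunk_table(header: list[str], rows: list[list[str]], rows_per_chunk: int = 20) -> list[str]:
    """Split a long table so every chunk repeats the header and the active sub-header."""
    chunks: list[str] = []
    body: list[list[str]] = []
    subheader = None
    for row in rows:
        if is_subheader(row):
            subheader = row
            if all(is_subheader(r) for r in body):
                body = []  # drop a carried sub-header that has no data rows under it
        body.append(row)
        if len(body) >= rows_per_chunk:
            chunks.append(render(header, body))
            body = [subheader] if subheader else []  # carry context into the next chunk
    if any(not is_subheader(r) for r in body):
        chunks.append(render(header, body))
    return chunks


header = ["Policy", "Premium", "Excess"]
rows = [
    ["Home", "", ""],
    ["Buildings", "200", "100"],
    ["Contents", "120", "50"],
    ["Car", "", ""],
    ["Comprehensive", "450", "250"],
]
chunks = chunk_table(header, rows, rows_per_chunk=2)
for c in chunks:
    print(c, end="\n\n")
```

With a tiny `rows_per_chunk` the output shows the "Contents" row still sitting under the "Home" sub-header in its own chunk; real values would be sized to the token budget.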

In cases where high value information is expressed in such constructs, be ready to spend time in this area gradually tuning and improving the AI’s understanding of the content. To close, perhaps considering the image helps convey why gen AI needs help in this arena:

Content ingestion/indexing

All the previous aspects need to be worked into an indexing pipeline of some sort which can continually ingest data from source platforms - the only exception would be if you’re creating a simple solution based on a one-time upload of some static content, which is certainly more straightforward. Most scenarios, however, require generative AI to work against continually changing data (e.g. new and changing documents in Microsoft 365), and this means ensuring all of your steps to support RAG - in terms of content processing, chunking, embeddings generation and so on - are called as part of an automated pipeline.
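The shape of such a pipeline is sketched below with every stage stubbed: documents whose content hash has not changed since the last run are skipped, and new or changed ones go through cracking, chunking, embedding and an upsert into the index. All names (`crack`, `chunk`, `embed`, `ingest`, and the dict standing in for the index) are hypothetical placeholders for, say, a document intelligence call, your chunking code, an embeddings API and your vector database.

```python
import hashlib


def crack(raw: bytes) -> str:
    return raw.decode("utf-8")  # placeholder for PDF/Office/OCR text extraction


def chunk(text: str, size: int = 200) -> list[str]:
    return [text[i:i + size] for i in range(0, len(text), size)]  # placeholder chunker


def embed(piece: str) -> list[float]:
    return [float(len(piece))]  # placeholder for an embeddings model call


def ingest(documents: dict[str, bytes], index: dict[str, dict], seen_hashes: dict[str, str]) -> list[str]:
    """Process only new or changed documents; return the IDs that were (re)indexed."""
    processed = []
    for doc_id, raw in documents.items():
        digest = hashlib.sha256(raw).hexdigest()
        if seen_hashes.get(doc_id) == digest:
            continue  # unchanged since the last run, so skip the expensive steps
        pieces = chunk(crack(raw))
        # Remove previous chunks for this document so a shorter version leaves no leftovers
        for key in [k for k in index if k.startswith(f"{doc_id}#")]:
            del index[key]
        for n, piece in enumerate(pieces):
            index[f"{doc_id}#{n}"] = {"text": piece, "vector": embed(piece), "source": doc_id}
        seen_hashes[doc_id] = digest
        processed.append(doc_id)
    return processed


index: dict[str, dict] = {}
hashes: dict[str, str] = {}
docs = {"policy.docx": b"Home insurance terms", "faq.txt": b"How do I claim?"}
first = ingest(docs, index, hashes)   # first run: both documents
docs["faq.txt"] = b"How do I make a claim online?"
second = ingest(docs, index, hashes)  # second run: only the changed FAQ
print(first, second)  # ['policy.docx', 'faq.txt'] ['faq.txt']
```

Whether the trigger is a schedule or change detection, the important property is that the same steps run automatically for every changed item.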

But what triggers the process? You could run on a schedule, but in many cases you can piggyback onto existing content indexing mechanisms, which may be scheduled or based on detection of content changes. Another benefit of Azure Cognitive Search as the RAG platform is its support for indexers, backed by the many connectors mentioned earlier. In our solutions, to bring GPT capabilities to documents stored in Microsoft 365, we use the SharePoint indexer in Cognitive Search to do the initial gathering, but extend it using skillsets to integrate document cracking, chunking, embeddings generation and other steps into the ingestion process. A few considerations come with this, including:

- The SharePoint indexer is still in preview at the time of writing

- Azure Cognitive Search (ACS) has limits on how many indexes and indexers you can have – these vary by pricing tier, but need consideration when indexing at scale

- The SharePoint indexer doesn’t currently deal well with some content scenarios such as deletions and folder renaming – this can lead to content staying in your gen AI platform when it shouldn’t, and missed content and/or broken links in citations

On the third point, our team have needed to augment the indexer to deal with these shortcomings. On the first, we have some views on challenges Microsoft might be running into with the SharePoint indexer (consider ACS ingesting a Microsoft 365 tenant with 30+ TB of data, for example) – and we hope this isn’t one of those cases where Microsoft tech gets pulled without even making it out of preview. Having Cognitive Search index documents in SharePoint is a common scenario for many reasons, not just generative AI – leaving the world to create their own indexing mechanisms would take away a big value-add for Microsoft’s premier search technology.
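A minimal sketch of the kind of augmentation described above, assuming you can list the current document IDs in the source and the document each indexed chunk belongs to: anything indexed whose parent document no longer exists in the source is treated as an orphan and removed. If your index is keyed on document paths or URLs (an assumption about your setup), a folder rename looks like the old paths disappearing, so the same reconciliation clears those stale entries while normal ingestion picks up the new paths. The functions are hypothetical and deliberately ignore paging, throttling and permissions.

```python
def find_orphans(source_ids: set[str], indexed_ids: set[str]) -> set[str]:
    """Documents still present in the index but no longer present in the source system."""
    return indexed_ids - source_ids


def reconcile(source_ids: set[str], index: dict[str, dict]) -> set[str]:
    """Remove every chunk whose parent document has disappeared from the source."""
    indexed_docs = {chunk["source"] for chunk in index.values()}
    orphans = find_orphans(source_ids, indexed_docs)
    for key in [k for k, chunk in index.items() if chunk["source"] in orphans]:
        del index[key]
    return orphans


index = {
    "sites/hr/policy.docx#0": {"source": "sites/hr/policy.docx", "text": "..."},
    "sites/hr/old-folder/plan.docx#0": {"source": "sites/hr/old-folder/plan.docx", "text": "..."},
}
# Hypothetical current state of the source after "old-folder" was renamed to "new-folder"
source_ids = {"sites/hr/policy.docx", "sites/hr/new-folder/plan.docx"}

removed = reconcile(source_ids, index)
print(removed)      # {'sites/hr/old-folder/plan.docx'}
print(list(index))  # ['sites/hr/policy.docx#0']
```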

Summary

Today, there’s no such thing as a genuine turn-key platform for “generative AI on large amounts of your organisational data across different platforms”. On a related note, Microsoft 365 Copilot is amazing for many scenarios (and we had early exposure through the limited Early Access Program), but it’s not the answer to every generative AI use case. Sure, data from other platforms can be integrated via Copilot plugins, but in my view the pattern is better suited to small-scale ‘callouts’ to the other systems (e.g. read or write a record) – this isn’t quite the model for “ingest terabytes of data from different company platforms to work with gen AI”.

However, with a talented team (or partner), such platforms can be built in a few weeks or months depending on your scope, and many parts of the stack will come from assembly of building blocks which exist already. Without a doubt, lots of the challenges above will be abstracted further in the next year or two – but at the same time, I’ll be surprised if Microsoft or anyone else cracks all parts of the puzzle in a way that works for everyone. Some elements will always be organisation-specific, and priorities will vary. Cost will always be a factor too – budgets will be found for AI projects demonstrating a path to return, but no-one wants to license a hugely expensive product only to find it can’t be easily configured or extended to work with company apps, data, and processes.

Similarly, no-one wants to spend a year building a platform because the team didn’t know what they were doing or weren’t following developments closely. Being plugged in to the firehose of generative AI changes is vital to avoid missteps and wasted effort. For implementors, I feel this is the new web development or the new databases - solutions of immense value can be built, so relevant expertise will be in demand. Following a series of “AI hacks” and client projects this year, I’m feeling good about how we’re shaping up at Content+Cloud/Advania to respond to this new era.

More fundamentally, the results we’re seeing from combining GPT (via Azure OpenAI) with our clients’ organisational data are hugely encouraging and show the power of generative AI in the workplace. Seeing the AI perform reasoning and answer deep questions over organisational data which came from different platforms and in different formats provides a vision of how AI will power organisations and how work will get done over the next few years. As I keep saying, it’s a magical time.