In my last post I introduced my Language Store solution for multi-lingual SharePoint sites, and showed the two ways it can be used:

In standard .Net procedural code:

string sButtonText = LanguageStore.GetValue("Search", "SearchGoButtonText");

Declaratively in HTML:

<asp:Button runat="server" id="btnSearch" Text="<%$ SPLang:Search|SearchGoButtonText %>" />

This declarative syntax is very useful because the developer doesn't have to clutter up code-behind files just to retrieve a value and assign it to the 'Text' property of various controls. I've also retrofitted this to my Config Store solution (along with some other enhancements), and this will be available on Codeplex soon. You might notice it's the same syntax as the SPUrl token, which can be used in master pages/page layouts to get a relative path to an image or CSS file; that's because I'm using the same .Net technique, known as an expression builder. Since I had to do some digging to work out how this was done, I'm guessing (could be wrong here!) many other developers haven't come across this either, so here's how it's done.

Implementing an expression builder class

An expression builder is essentially a class which derives from System.Web.Compilation.ExpressionBuilder and contains logic to evaluate an expression at page parse time. The 'secret' is that the ASP.Net page parsing engine knows to call into your class whenever it encounters an expression of the form <%$ Prefix:Expression %> where the prefix is one registered for that class. These are the things that join this mini-framework together:

- Class derived from ExpressionBuilder which overrides the EvaluateExpression() and GetCodeExpression() methods

- Declaration in web.config which associates your prefix ('SPLang' in my case) with your expression builder class

- Optional use of ExpressionPrefix attribute on class for designer support

- An expression in declarative HTML (as per the example above)

Taking things step-by-step, here's what my class looks like:

    using System;
    using System.CodeDom;
    using System.ComponentModel;
    using System.Diagnostics;
    using System.Web.Compilation;
    using System.Web.UI;

    [ExpressionPrefix("SPLangStore")]
    public class LangStoreExpressionBuilder : ExpressionBuilder
    {
        private static TraceSwitch traceSwitch = new TraceSwitch("COB.SharePoint.Utilities.LanguageStore",
            "Trace switch for Language Store");
        private static LangStoreTraceHelper trace = new LangStoreTraceHelper("COB.SharePoint.Utilities.LangStoreExpressionBuilder");

        // Shared helper: called by EvaluateExpression() and by the code which GetCodeExpression() emits.
        public static object GetEvalData(string expression, Type target, string entry)
        {
            trace.WriteLineIf(traceSwitch.TraceVerbose, TraceLevel.Verbose, "GetEvalData(): Entered with expression '{0}'.",
                expression);

            // Check the number of parts before indexing into the array, so that a token with no '|'
            // gives the descriptive exception below rather than an IndexOutOfRangeException.
            string[] aExpressionParts = expression.Split('|');
            if ((aExpressionParts.Length != 2) ||
                string.IsNullOrEmpty(aExpressionParts[0]) || string.IsNullOrEmpty(aExpressionParts[1]))
            {
                trace.WriteLineIf(traceSwitch.TraceError, TraceLevel.Error, "GetEvalData(): Unable to parse expression '{0}' into " +
                    "format 'Category|Title' - throwing exception.",
                    expression);
                throw new LanguageStoreConfigurationException("Token passed to Language Store expression builder was in the wrong format - " +
                    "expressions should be in form Language Store Category|Item Title e.g. Search|SearchGoButtonText");
            }

            string sCategory = aExpressionParts[0];
            string sTitle = aExpressionParts[1];

            // The key line: everything else is plumbing around this call.
            string sValue = LanguageStore.GetValue(sCategory, sTitle);

            trace.WriteLineIf(traceSwitch.TraceInfo, TraceLevel.Info, "GetEvalData(): Retrieved '{0}' from Language Store.",
                sValue);
            trace.WriteLineIf(traceSwitch.TraceVerbose, TraceLevel.Verbose, "GetEvalData(): Returning '{0}'.",
                sValue);

            return sValue;
        }

        // Used when the page is parsed but not compiled.
        public override object EvaluateExpression(object target, BoundPropertyEntry entry,
            object parsedData, ExpressionBuilderContext context)
        {
            return GetEvalData(entry.Expression, target.GetType(), entry.Name);
        }

        // Used when the page is compiled: returns a CodeDOM expression which calls GetEvalData()
        // and casts the result to the type of the property being set.
        public override CodeExpression GetCodeExpression(BoundPropertyEntry entry,
            object parsedData, ExpressionBuilderContext context)
        {
            Type type1 = entry.DeclaringType;
            PropertyDescriptor descriptor1 = TypeDescriptor.GetProperties(type1)[entry.PropertyInfo.Name];

            CodeExpression[] expressionArray1 = new CodeExpression[3];
            expressionArray1[0] = new CodePrimitiveExpression(entry.Expression.Trim());
            expressionArray1[1] = new CodeTypeOfExpression(type1);
            expressionArray1[2] = new CodePrimitiveExpression(entry.Name);

            return new CodeCastExpression(descriptor1.PropertyType, new CodeMethodInvokeExpression(
                new CodeTypeReferenceExpression(base.GetType()), "GetEvalData", expressionArray1));
        }

        public override bool SupportsEvaluate
        {
            get { return true; }
        }
    }

If you're wondering why two methods are required, it's because GetCodeExpression() is used when the page is compiled (the CodeDOM expression it returns is emitted into the generated page class), whereas EvaluateExpression() is used when the page is parsed but not compiled, and is only called if SupportsEvaluate returns true. My code follows the MSDN pattern which supports both modes and uses a third helper method (GetEvalData()) which both call into. It's this GetEvalData() method which does the work of parsing the passed expression and then using it to obtain the value - in my case the expression is the 'category' and 'title' of the item to fetch from the Language Store. Notice that the key line in all of that is the one in GetEvalData() which calls my existing LanguageStore.GetValue() method - so effectively my expression builder is just a wrapper for this method.
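To make the GetCodeExpression() side a little more concrete, here's a minimal stand-alone sketch (a console program, not part of the Language Store source) which builds the same shape of CodeDOM expression and asks the C# code provider to render it as source text. The class name CodeExpressionDemo is made up for illustration, and the type names are passed as strings so that the sketch doesn't need a reference to System.Web or to the Language Store assembly.

```csharp
using System;
using System.CodeDom;
using System.CodeDom.Compiler;
using System.IO;
using Microsoft.CSharp;

// Hypothetical demo class: renders the kind of expression GetCodeExpression() returns.
public static class CodeExpressionDemo
{
    public static string Render()
    {
        // Equivalent of: (string)LangStoreExpressionBuilder.GetEvalData(token, typeof(Button), "Text")
        CodeExpression call = new CodeCastExpression(
            typeof(string),
            new CodeMethodInvokeExpression(
                new CodeTypeReferenceExpression("LangStoreExpressionBuilder"),
                "GetEvalData",
                new CodePrimitiveExpression("Search|SearchGoButtonText"),
                new CodeTypeOfExpression("System.Web.UI.WebControls.Button"),
                new CodePrimitiveExpression("Text")));

        using (CSharpCodeProvider provider = new CSharpCodeProvider())
        using (StringWriter writer = new StringWriter())
        {
            // Turn the CodeDOM tree into C# source, as the page compiler would.
            provider.GenerateCodeFromExpression(call, writer, new CodeGeneratorOptions());
            return writer.ToString();
        }
    }

    public static void Main()
    {
        Console.WriteLine(Render());
    }
}
```

The rendered text is a cast around a static call to GetEvalData() with the token, the control type and the property name as arguments. In a compiled page, ASP.Net assigns the result of an expression like this to the control property (the button's Text in my example).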

My web.config entry looks like this:

<add expressionPrefix="SPLang" type="COB.SharePoint.Utilities.LangStoreExpressionBuilder, COB.SharePoint.Utilities.LanguageStore, Version=1.0.0.0, Culture=neutral, PublicKeyToken=23afbf06fd91fa64" />
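For context, this entry belongs in the expressionBuilders section under system.web/compilation. A trimmed fragment showing just the nesting (a real SharePoint web.config has many other elements and attributes at each level, which are left out here):

```xml
<configuration>
  <system.web>
    <compilation>
      <expressionBuilders>
        <add expressionPrefix="SPLang" type="COB.SharePoint.Utilities.LangStoreExpressionBuilder, COB.SharePoint.Utilities.LanguageStore, Version=1.0.0.0, Culture=neutral, PublicKeyToken=23afbf06fd91fa64" />
      </expressionBuilders>
    </compilation>
  </system.web>
</configuration>
```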

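And finally here's how the component parts of the expression get used: in <%$ SPLang:Search|SearchGoButtonText %>, the 'SPLang' prefix before the colon is matched against the expressionPrefix in web.config to find the expression builder class, and everything after the colon ('Search|SearchGoButtonText') is passed to that class as the expression, which GetEvalData() splits on the '|' character into the category and title.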

For SharePoint solutions, assuming we're deploying our code as a Feature/Solution, we'd generally want to add the web.config entry automatically by way of the SPWebConfigModification class. You can find the code to do this on Codeplex in the source code for my Language Store solution (in the Feature receiver).
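If you just want the general shape of it, here's a rough sketch of a Feature receiver which adds the entry. This is not the code from the Language Store source: the class name, the owner string and the assumption that the Feature is scoped to the Web Application are all mine, so treat it as a starting point only.

```csharp
using System;
using Microsoft.SharePoint;
using Microsoft.SharePoint.Administration;

// Hypothetical receiver, assuming a Web Application-scoped Feature.
public class ExpressionBuilderFeatureReceiver : SPFeatureReceiver
{
    // Assumed owner key, used to find our own entries again on deactivation.
    private const string Owner = "LangStoreExpressionBuilder";

    public static SPWebConfigModification CreateModification()
    {
        SPWebConfigModification mod = new SPWebConfigModification();
        mod.Path = "configuration/system.web/compilation/expressionBuilders";
        mod.Name = "add[@expressionPrefix='SPLang']";
        mod.Owner = Owner;
        mod.Sequence = 0;
        mod.Type = SPWebConfigModification.SPWebConfigModificationType.EnsureChildNode;
        mod.Value = "<add expressionPrefix=\"SPLang\" type=\"COB.SharePoint.Utilities.LangStoreExpressionBuilder, " +
            "COB.SharePoint.Utilities.LanguageStore, Version=1.0.0.0, Culture=neutral, PublicKeyToken=23afbf06fd91fa64\" />";
        return mod;
    }

    public override void FeatureActivated(SPFeatureReceiverProperties properties)
    {
        SPWebApplication webApp = properties.Feature.Parent as SPWebApplication;
        if (webApp == null) return; // Feature not scoped as assumed

        webApp.WebConfigModifications.Add(CreateModification());
        webApp.Update();
        webApp.Farm.Services.GetValue<SPWebService>().ApplyWebConfigModifications();
    }

    public override void FeatureDeactivating(SPFeatureReceiverProperties properties)
    {
        SPWebApplication webApp = properties.Feature.Parent as SPWebApplication;
        if (webApp == null) return;

        // Remove only the modifications this Feature registered.
        for (int i = webApp.WebConfigModifications.Count - 1; i >= 0; i--)
        {
            if (webApp.WebConfigModifications[i].Owner == Owner)
            {
                webApp.WebConfigModifications.RemoveAt(i);
            }
        }
        webApp.Update();
        webApp.Farm.Services.GetValue<SPWebService>().ApplyWebConfigModifications();
    }

    public override void FeatureInstalled(SPFeatureReceiverProperties properties) { }

    public override void FeatureUninstalling(SPFeatureReceiverProperties properties) { }
}
```

Using EnsureChildNode with a Name which identifies the node by its expressionPrefix means SharePoint can find and remove the same node later, which is why the deactivation code only needs to match on the owner.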

Finally, if you're building an expression builder and this information doesn't get you all the way, the MSDN documentation for the ExpressionBuilder class has some additional details.

Enhancing the design-time experience with a custom expression editor

This is something I haven't looked at yet, but looks extremely cool! If the standard expression builder stuff wasn't convenient enough for you, you can extend things further by providing a custom 'ExpressionEditor' for use in Visual Studio. If I understand the possibility correctly, this can provide a better experience in the VS properties grid in two ways:

- a custom editor sheet (e.g. a dialog to enter the 'category' and 'title' of the Language Store item - let's say 'Search' and 'SearchGoButtonText' was entered respectively, this would 'build' the string in the correct delimited 'Search|SearchGoButtonText' form required)

- a custom picker using the Expressions collection - this could (I think) be used to query the Language Store list and display all the items, so that selecting the item to display the translation of is as simple as a few clicks, no typing!
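As a very rough sketch of the first option (untested against the Language Store, and with class names I've made up), an editor derives from System.Web.UI.Design.ExpressionEditor and hands back an ExpressionEditorSheet whose GetExpression() method builds the delimited string. As I understand it, the public properties on the sheet are what the designer shows for editing. This needs a reference to System.Design.dll.

```csharp
using System;
using System.Web.UI.Design;

// Hypothetical design-time editor for the 'Category|Title' expression format.
public class LangStoreExpressionEditor : ExpressionEditor
{
    // Asked for by the designer when it wants a value to display for the expression.
    public override object EvaluateExpression(string expression, object parseTimeData,
        Type propertyType, IServiceProvider serviceProvider)
    {
        // Assumption: the Language Store list isn't reachable at design time, so echo the token.
        return "[" + expression + "]";
    }

    public override ExpressionEditorSheet GetExpressionEditorSheet(string expression,
        IServiceProvider serviceProvider)
    {
        return new LangStoreExpressionEditorSheet(expression, serviceProvider);
    }
}

// Hypothetical sheet with one property per part of the expression.
public class LangStoreExpressionEditorSheet : ExpressionEditorSheet
{
    public LangStoreExpressionEditorSheet(string expression, IServiceProvider serviceProvider)
        : base(serviceProvider)
    {
        // Pre-populate from any existing expression, tolerating a missing or partial value.
        string[] parts = (expression ?? string.Empty).Split('|');
        Category = parts.Length > 0 ? parts[0] : string.Empty;
        Title = parts.Length > 1 ? parts[1] : string.Empty;
    }

    public string Category { get; set; }

    public string Title { get; set; }

    public override bool IsValid
    {
        get { return !string.IsNullOrEmpty(Category) && !string.IsNullOrEmpty(Title); }
    }

    // Builds the delimited form the expression builder expects.
    public override string GetExpression()
    {
        return Category + "|" + Title;
    }
}
```

The editor would then be associated with the expression builder by adding [ExpressionEditor(typeof(LangStoreExpressionEditor))] to the builder class, alongside the ExpressionPrefix attribute.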

I'd absolutely love to implement this for the Language Store/Config Store - so I might return to this at a later date!

Conclusion

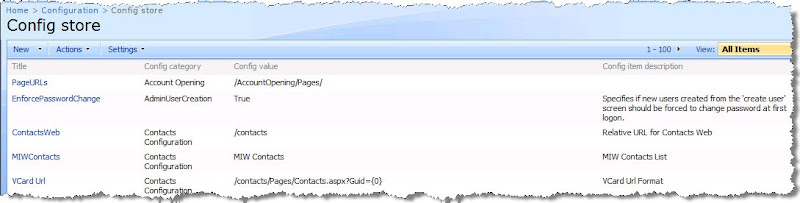

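Expression builders provide a powerful, clean way to inject method calls into your markup. In most cases we're used to seeing them return strings as in my Language Store/Config Store implementations, and note that the following implementations are also present in the .Net framework:

- AppSettingsExpressionBuilder (prefix 'AppSettings') - retrieves values from the appSettings section of web.config

- ConnectionStringsExpressionBuilder (prefix 'ConnectionStrings') - retrieves connection strings from web.config

- ResourceExpressionBuilder (prefix 'Resources') - retrieves values from resource files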

However, one final thing to bear in mind is that the methods are declared as returning an object - so theoretically it should be possible to do a whole host of other things, where the processing returns a more complex object which gets assigned to the control property. An example could be data-binding scenarios where your method returns something which implements IEnumerable/IList - this could then be assigned to the DataSource property of your control declaratively. You might have other possibilities in mind, but hopefully that's food for thought ;-)
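To illustrate the data-binding idea, here's a small sketch of an expression builder which returns a list rather than a string. Everything in it (the DemoListExpressionBuilder class, the 'DemoList' prefix and the hard-coded items) is invented for the example, and I haven't tried it in anger, so treat it as a thought experiment in code.

```csharp
using System.CodeDom;
using System.Collections.Generic;
using System.Web.Compilation;
using System.Web.UI;

// Hypothetical builder for markup such as DataSource="<%$ DemoList:Colours %>".
public class DemoListExpressionBuilder : ExpressionBuilder
{
    // Returns a list for the given key; a real implementation would query a data store.
    public static object GetItems(string expression)
    {
        if (expression == "Colours")
        {
            return new List<string> { "Red", "Green", "Blue" };
        }
        return new List<string>();
    }

    public override CodeExpression GetCodeExpression(BoundPropertyEntry entry,
        object parsedData, ExpressionBuilderContext context)
    {
        // No cast is emitted here, which suits a property typed as object (such as DataSource).
        return new CodeMethodInvokeExpression(
            new CodeTypeReferenceExpression(typeof(DemoListExpressionBuilder)),
            "GetItems",
            new CodePrimitiveExpression(entry.Expression.Trim()));
    }
}
```

With the 'DemoList' prefix registered in web.config, a control could then declare DataSource="<%$ DemoList:Colours %>". My expectation is that you would still need something to call DataBind() on the control, since assigning DataSource doesn't bind by itself.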